Supervised Learning — Linear Regression (Using R)

Problem Statement: Generate a suitable 2-D data set of N points and split it into a training data set and a test data set. Then:

i) Perform linear regression analysis with the least squares method.
ii) Plot the graphs of the training MSE and test MSE, and comment on curve fitting and generalization error.
iii) Verify the effect of data set size and the bias-variance tradeoff.
iv) Apply cross-validation and plot the graphs of the errors.
v) Apply the subset selection method and plot the graphs of the errors.
vi) Describe your findings in each case.
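As a starting point for the problem statement, the following R sketch generates a synthetic 2-D data set and splits it into training and test sets. The true line (intercept 2, slope 3), the noise level, N = 200 and the random 70/30 split are all illustrative assumptions, not values taken from the original program.

```r
# Minimal sketch (assumed setup): a synthetic 2-D data set of N points.
# Intercept 2, slope 3, noise sd 2 and the 70/30 split are illustrative
# assumptions, not values from the original program.
set.seed(42)                        # reproducible random draws

N <- 200                            # number of points
x <- runif(N, min = 0, max = 10)    # input values
y <- 2 + 3 * x + rnorm(N, sd = 2)   # linear signal plus Gaussian noise
dataset <- data.frame(x = x, y = y)

# Randomly assign 70% of the rows to training and the rest to testing
train_idx <- sample(seq_len(N), size = round(0.7 * N))
train <- dataset[train_idx, ]
test  <- dataset[-train_idx, ]

# Least-squares fit on the training set only (part i)
fit <- lm(y ~ x, data = train)
print(coef(fit))
```

Fitting only on `train` keeps `test` unseen, so the test error later measures generalization rather than how well the line memorized the training points.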

The link to the program and datasets is given at the end of this post.

What Is Linear Regression?

Linear regression is a statistical modeling technique that is also widely used in machine learning. In linear regression, we predict a scalar output value (y) from one or more input values (x), assuming that the relationship between them is linear.

The input variables are also called independent variables, because the model treats them as given rather than as depending on other values. In the same sense, the scalar outputs are called dependent variables, because their values are predicted from (that is, depend on) the input variables.

Mathematical Representation of Linear Regression

To understand how linear regression is represented, we need to consider both its mathematical form and how it is used in machine learning. The idea itself is simple.

Suppose we have a collection of labeled examples

$$\{(x_i, y_i)\}_{i=1}^{N}$$

where N is the size of the dataset, $x_i$ is the feature vector of the i-th example (i = 1, …, N), and $y_i$ is its real-valued target. For more clarity, you can call the collection of $x_i$ the independent variables and the collection of $y_i$ the dependent or target variables.

Put simply, we want to build a model that combines the features in x linearly to predict the scalar values in y.

For simple linear regression, that is, a single input x and a single output y, we can write

$$y = \beta_0 + \beta_1 x$$

where $\beta_0$ is the intercept parameter and $\beta_1$ is the slope parameter.

This is the same as the equation of a straight line,

$$y = mx + b$$

where m is the slope and b is the y-intercept, so m plays the role of $\beta_1$ and b the role of $\beta_0$. The cost function discussed later uses this m, b notation.

For now, keep in mind that these equations are for a single-valued x. We will come back to them to see how they are actually used in machine learning. First, let’s look at what simple linear regression and multiple linear regression are.

Simple Linear Regression

In linear regression, we predict the dependent variable (y) from the independent variables (x).

In simple linear regression, each observation i = 1, 2, …, N has exactly one input $x_i$ and one output $y_i$. This is the simplest form of linear regression.
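For simple linear regression, the least squares estimates have a closed form: $\hat\beta_1 = \sum (x_i - \bar x)(y_i - \bar y) / \sum (x_i - \bar x)^2$ and $\hat\beta_0 = \bar y - \hat\beta_1 \bar x$. The R sketch below, on made-up synthetic data, computes them by hand and checks them against lm().

```r
# Synthetic data (an assumption for illustration): true intercept 1.5, slope 0.8
set.seed(1)
x <- runif(50, 0, 10)
y <- 1.5 + 0.8 * x + rnorm(50)

# Closed-form least-squares estimates for y = beta0 + beta1 * x
beta1 <- sum((x - mean(x)) * (y - mean(y))) / sum((x - mean(x))^2)
beta0 <- mean(y) - beta1 * mean(x)

# lm() solves the same least-squares problem, so the numbers should agree
fit <- lm(y ~ x)
print(c(beta0 = beta0, beta1 = beta1))
print(coef(fit))
```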

Multiple Linear Regression

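In multiple linear regression, we have two or more independent variables (x) and one dependent variable (y). So, instead of a single feature column, we have multiple feature columns and a target column. With p features, the model becomes

$$y = \beta_0 + \beta_1 x_1 + \beta_2 x_2 + \dots + \beta_p x_p$$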
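A minimal R sketch of multiple linear regression with two hypothetical features, x1 and x2, on synthetic data. lm() estimates one coefficient per feature plus an intercept.

```r
# Synthetic data (assumed): y depends on two features plus a little noise
set.seed(7)
n  <- 100
x1 <- rnorm(n)
x2 <- rnorm(n)
y  <- 1 + 2 * x1 - 0.5 * x2 + rnorm(n, sd = 0.3)
dat <- data.frame(y, x1, x2)

# Fit y = beta0 + beta1 * x1 + beta2 * x2 by least squares
fit <- lm(y ~ x1 + x2, data = dat)
print(coef(fit))   # (Intercept), x1, x2
```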

Mean Squared Error Cost Function

The mean squared error (MSE) is the average squared difference between the target values $y_i$ in the labeled set and the values predicted by the model. We minimize this average squared difference to obtain a good regression model for the given data.

The following is the formula for MSE:

$$\text{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - (m x_i + b)\right)^2$$

In the above equation, N is the number of labeled examples in the training dataset, $y_i$ is the target value of the i-th example, and $m x_i + b$ is the model's predicted value for that example.

Minimizing this cost function means choosing m and b so that the predicted values are as close as possible to the training target values. This ensures that the fitted line lies close to the training examples.
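The MSE formula translates directly into a one-line R function. The function name mse and the four sample points are assumptions made for illustration.

```r
# MSE of the line y = m * x + b on the points (x, y), as in the formula above
mse <- function(m, b, x, y) {
  mean((y - (m * x + b))^2)
}

x <- c(1, 2, 3, 4)
y <- c(3, 5, 7, 10)

mse(m = 2, b = 1, x = x, y = y)   # residuals 0, 0, 0, 1, so MSE = 0.25

# The least-squares line (slope 2.3, intercept 0.5 for these points)
# minimizes the MSE, so it scores lower than the guess above
fit <- lm(y ~ x)
mse(m = coef(fit)[["x"]], b = coef(fit)[["(Intercept)"]], x = x, y = y)
```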

To minimize the cost function, we need to find the optimal values of the two parameters m and b. One way to do this is the gradient descent method, which uses the partial derivatives of the cost with respect to m and b. Written as a function of these two parameters, the cost function is:

$$f(m,b) = \frac{1}{N}\sum_{i=1}^{N}\left(y_i - (m x_i + b)\right)^2$$

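Next, we use the chain rule to find the partial derivatives of this function:

$$\frac{\partial f}{\partial m} = -\frac{2}{N}\sum_{i=1}^{N} x_i\left(y_i - (m x_i + b)\right), \qquad \frac{\partial f}{\partial b} = -\frac{2}{N}\sum_{i=1}^{N}\left(y_i - (m x_i + b)\right)$$

Gradient descent starts from initial values of the slope and intercept and repeatedly moves them a small step (the learning rate) against these derivatives, reducing the cost at each iteration. For linear regression, the minimum can also be computed directly with the least squares method, which is how R's lm() function fits the model.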
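The gradient descent updates can be sketched in R as follows. The function name gradient_descent, the learning rate (0.01), the number of iterations (5000) and the synthetic data are assumptions; the result is compared with lm(), which solves the same least squares problem directly.

```r
# Batch gradient descent for f(m, b) = (1/N) * sum((y - (m * x + b))^2)
gradient_descent <- function(x, y, lr = 0.01, iters = 5000) {
  m <- 0
  b <- 0
  N <- length(x)
  for (i in seq_len(iters)) {
    resid <- y - (m * x + b)
    dm <- -(2 / N) * sum(x * resid)   # partial derivative with respect to m
    db <- -(2 / N) * sum(resid)       # partial derivative with respect to b
    m <- m - lr * dm                  # step against the gradient
    b <- b - lr * db
  }
  c(m = m, b = b)
}

# Synthetic data (assumed): true intercept 4, slope 1.5
set.seed(3)
x <- runif(100, 0, 5)
y <- 4 + 1.5 * x + rnorm(100, sd = 0.5)

gd  <- gradient_descent(x, y)
ols <- coef(lm(y ~ x))
print(gd)
print(ols)
```

If the learning rate is too large the updates diverge instead of converging, so lr usually needs tuning to the scale of x.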

Program for Linear Regression Using the Advertisement Dataset

Step 1: Load the required dataset.

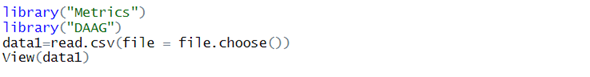

Input:

The code above shows how the dataset can be imported into RStudio. Once the import succeeds, we can view the dataset. The output of the code is shown below.

Output:
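If the dataset is stored as a CSV file, one way to import it is read.csv(). In this sketch a small synthetic CSV is written to a temporary file so the code runs on its own; the file contents and the names csv_path and dataset are assumptions, and in practice you would point read.csv() at your own file.

```r
# Write a small synthetic CSV (assumed contents) so the sketch is self-contained;
# replace csv_path with the path to your own dataset file
csv_path <- tempfile(fileext = ".csv")
write.csv(data.frame(x = 1:5, y = c(2.1, 3.9, 6.2, 7.8, 10.1)),
          csv_path, row.names = FALSE)

dataset <- read.csv(csv_path)   # import the CSV into a data frame
str(dataset)                    # column names and types
head(dataset)                   # first rows; in RStudio, View(dataset) opens a viewer
```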

Step 2: Extracting the required data.

Input:

Here data1 holds the part of the main dataset that we need to work with.

Output:
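Extracting the working data usually amounts to selecting columns from the imported data frame. The column names id, x and y below are hypothetical stand-ins, since the original column names are not shown.

```r
# Hypothetical full dataset with an extra column that the regression does not need
dataset <- data.frame(id = 1:6,
                      x  = c(1, 2, 3, 4, 5, 6),
                      y  = c(2.0, 4.1, 5.9, 8.2, 9.8, 12.1))

# Keep only the columns used in the regression
data1 <- dataset[, c("x", "y")]
head(data1)
```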

Step 3: Dividing the dataset into Training and Testing Datasets.

Input:

Here rows 1:140 are used for training and rows 141:200 for testing, a 70/30 split of the 200 rows.

Output:
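The row-based split described in Step 3 can be sketched as follows, using a synthetic 200-row data frame in place of the original data (an assumption). A split by row position is only representative if the rows are not sorted in some meaningful order; otherwise, shuffle the rows first.

```r
# Synthetic stand-in for the 200-row dataset (assumed values)
set.seed(10)
data1 <- data.frame(x = runif(200, 0, 100))
data1$y <- 5 + 0.05 * data1$x + rnorm(200)

train <- data1[1:140, ]     # first 140 rows for training
test  <- data1[141:200, ]   # remaining 60 rows for testing
```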

Step 4: Binding all the columns using the cbind function and fitting the model.

Input:

lm is used to fit linear models. It can be used to carry out regression, single stratum analysis of variance and analysis of covariance.

Models for lm are specified symbolically. A typical model has the form response ~ terms where response is the (numeric) response vector and terms is a series of terms which specifies a linear predictor for response. A terms specification of the form first + second indicates all the terms in first together with all the terms in second with duplicates removed. A specification of the form first:second indicates the set of terms obtained by taking the interactions of all terms in first with all terms in second. The specification first*second indicates the cross of first and second. This is the same as first + second + first:second.

Output:
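The following sketch illustrates cbind() and the formula operators described above on synthetic data; the variable names a, b and y are hypothetical. It checks that y ~ a * b fits the same model as y ~ a + b + a:b.

```r
# Synthetic data (assumed) with an interaction between a and b
set.seed(5)
a <- rnorm(50)
b <- rnorm(50)
y <- 1 + a - 2 * b + 0.5 * a * b + rnorm(50, sd = 0.1)

# cbind() joins the column vectors side by side into a matrix
mat <- cbind(y, a, b)
dat <- as.data.frame(mat)

fit_additive <- lm(y ~ a + b, data = dat)         # main effects only
fit_star     <- lm(y ~ a * b, data = dat)         # a + b + a:b
fit_explicit <- lm(y ~ a + b + a:b, data = dat)   # the same model, spelled out

print(coef(fit_star))   # (Intercept), a, b, a:b
```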

Step 5: Plotting the graph for an individual object.

Input:

Output:
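Since the original plotting code is not shown, here is one plausible R sketch of this step on synthetic data: a scatter plot of the training points with the fitted line drawn by abline(), followed by the standard diagnostic plots that plot() draws for an lm object.

```r
# Synthetic training data (assumed values)
set.seed(2)
train <- data.frame(x = runif(100, 0, 10))
train$y <- 3 + 2 * train$x + rnorm(100, sd = 2)
fit <- lm(y ~ x, data = train)

# Scatter plot of the training data with the fitted least-squares line
plot(train$x, train$y, xlab = "x", ylab = "y",
     main = "Training data and fitted line")
abline(fit, col = "red", lwd = 2)

# plot() on an lm object draws diagnostic plots (residuals vs fitted,
# normal Q-Q, scale-location, residuals vs leverage)
op <- par(mfrow = c(2, 2))
plot(fit)
par(op)   # restore the previous layout
```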

Step 6: Predicting values from the fitted model.

Input:

Output:
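Predicting with a fitted model is done with predict(), passing the new data through the newdata argument. The data below is synthetic and the 140/60 split mirrors Step 3; interval = "prediction" is optional and returns lower and upper bounds alongside each prediction.

```r
# Synthetic data (assumed) split as in Step 3
set.seed(4)
data1 <- data.frame(x = runif(200, 0, 10))
data1$y <- 1 + 0.7 * data1$x + rnorm(200, sd = 0.5)
train <- data1[1:140, ]
test  <- data1[141:200, ]

fit <- lm(y ~ x, data = train)
pred_test <- predict(fit, newdata = test)   # uses test$x; test$y is not used
head(data.frame(actual = test$y, predicted = pred_test))

# Optional: 95% prediction intervals (matrix with columns fit, lwr, upr)
pred_int <- predict(fit, newdata = test, interval = "prediction", level = 0.95)
head(pred_int)
```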

Step 7: Finally, plotting the model for the training and testing datasets.

Input:

Output:
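A sketch of this final step on synthetic data: it plots the fitted line over the training and test sets side by side and computes the training and test MSE asked for in part ii of the problem statement. The data values and the split are assumptions.

```r
# Synthetic data (assumed) split as in Step 3
set.seed(8)
data1 <- data.frame(x = runif(200, 0, 10))
data1$y <- 2 + 1.2 * data1$x + rnorm(200, sd = 1.5)
train <- data1[1:140, ]
test  <- data1[141:200, ]
fit <- lm(y ~ x, data = train)

# Training and test MSE (part ii of the problem statement)
train_mse <- mean((train$y - predict(fit, newdata = train))^2)
test_mse  <- mean((test$y  - predict(fit, newdata = test))^2)
cat("Training MSE:", train_mse, " Test MSE:", test_mse, "\n")

# The same fitted line drawn over each subset, side by side
op <- par(mfrow = c(1, 2))
plot(train$x, train$y, main = "Training set", xlab = "x", ylab = "y")
abline(fit, col = "red", lwd = 2)
plot(test$x, test$y, main = "Test set", xlab = "x", ylab = "y")
abline(fit, col = "red", lwd = 2)
par(op)   # restore the previous layout
```

A test MSE much larger than the training MSE is a sign that the model does not generalize well beyond the training data.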

Click here to download the Program and Datasets…

About Us

Thinksprout Infotech is leading IT Solutions company providing excellent services with great efforts. The Company also deals in Online Application and Custom Software Development. Moreover, we have an extensively experienced team in programming databases and back-end solutions. In the same vein, we develop user-friendly applications for our clients for better operations and outputs.
